Snapchat’s augmented reality dreams might be starting to look a bit more realistic.

The company has been steadily refining its AR-powered Lenses each year, polishing the technical odds and ends and strengthening its developer platform. The result is that today more than 170 million people (over three-quarters of Snap’s daily active users) use the app’s augmented reality features every day, the company says. Two years ago, Snap said creators had designed more than 100,000 Lenses on the platform; now it says more than 1 million Lenses have been created.

The goofy filters are bringing users to the app, and the company is slowly building a more interconnected platform around augmented reality that looks increasingly promising.

Today, at Snap’s annual developer event, the company announced a series of updates, including Lens voice search, a bring-your-own machine learning model update to Lens Studio and a geography-specific AR system that will turn public Snaps into spatial data that the company can use to three-dimensionally map huge physical spaces.

An Alexa for AR

Snapchat’s Lens carousel was sufficient for swiping between filters when there were only a couple dozen to pick through. With one million Lenses and counting, though, it has long been clear that Snapchat’s AR ambitions were suffering from poor discoverability.

Snap is preparing to roll out a new way of sorting through Lenses: voice. If it can nail this, the company will have a clear path from entertainment-only AR to a platform built around utility. In its current form, the new voice search will let Snapchat users ask the app to surface filters that enable them to do something unique.

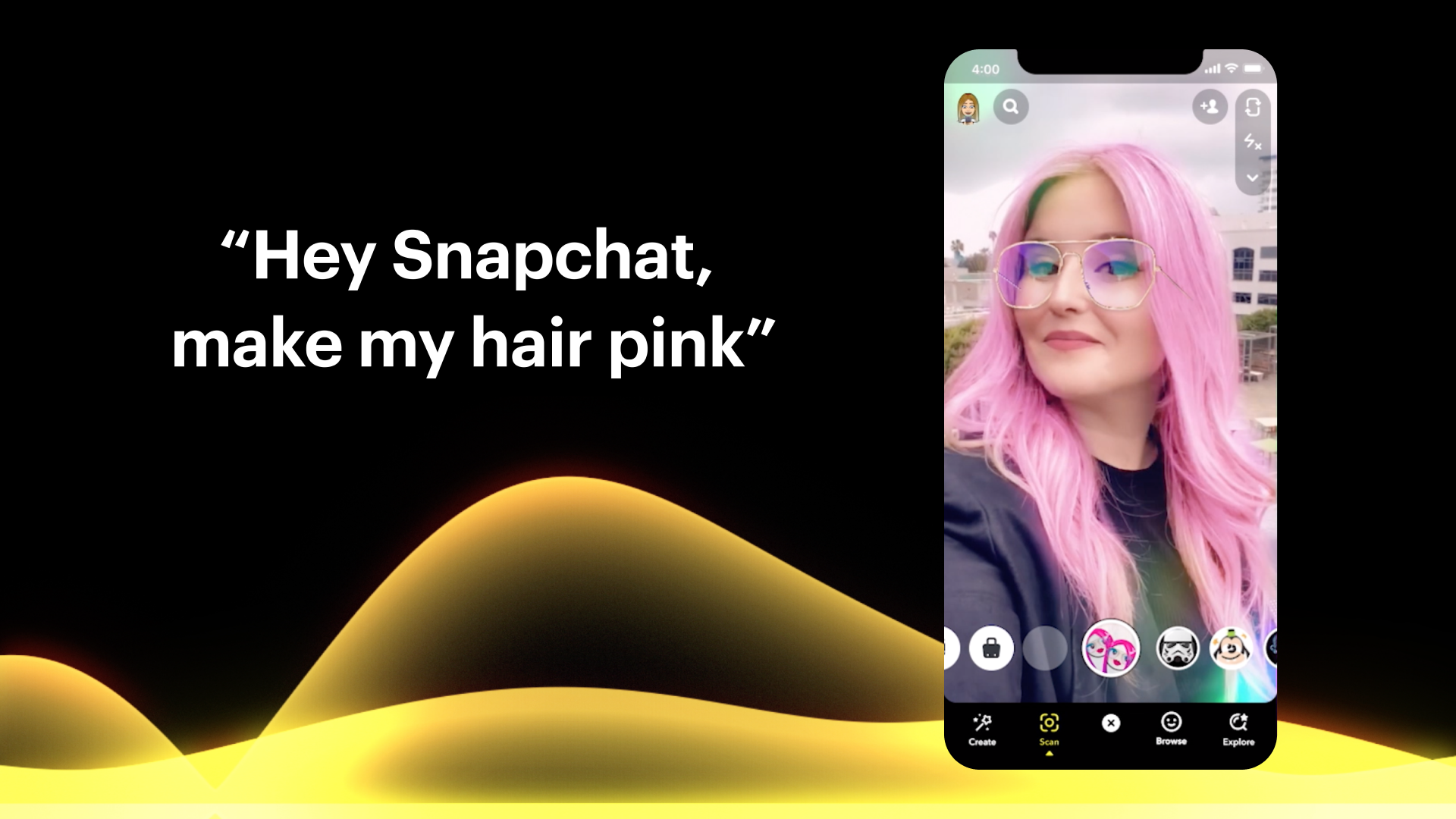

While it’s easy to see where a feature like this could go if users rallied around it, the examples highlighted in a press pre-briefing didn’t exactly indicate that Snapchat wants this to feel like a digital assistant right out of the gate:

- “Hey Snapchat, make my hair pink”

- “Hey Snapchat, give me a hug!”

- “Hey Snapchat, take me to the moon”

It’ll be interesting to see whether users turn to this functionality at all early on, while the capabilities of Lenses are all over the place. Still, just building this infrastructure into the app seems powerful, especially given the company’s partnerships for visual search with Amazon and audio search with Shazam inside its Scan feature. It’s not hard to imagine asking the app to let you try on makeup from a specific brand, or asking it to show you what a 55″ TV would look like on your wall.

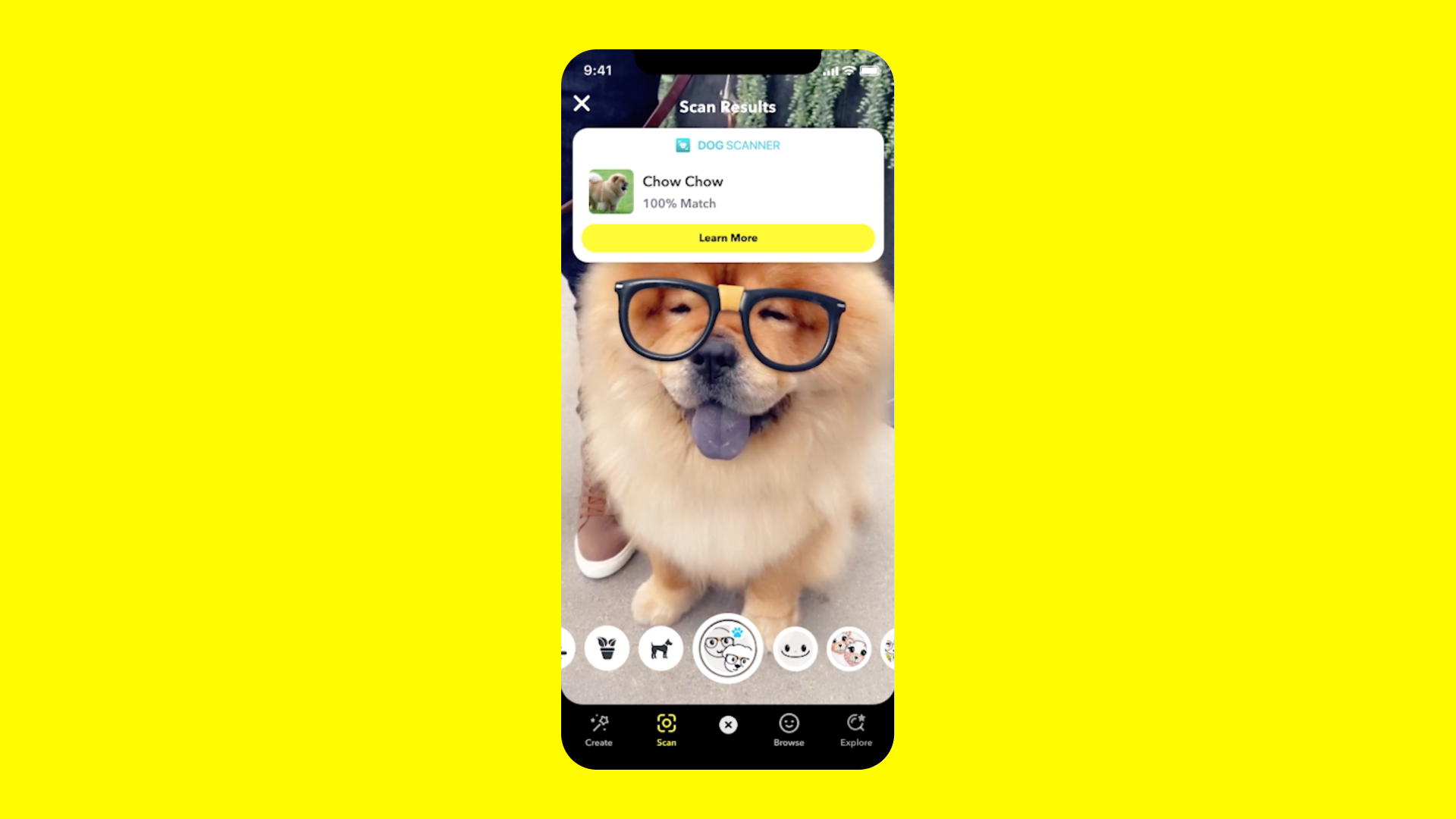

The company also announced new partnerships for its visual search: PlantSnap, to help Snapchat users identify plants and trees; Dog Scanner, to let users point their camera at a dog and determine its breed; and, later this year, Yuka, which will give nutrition ratings for food after you scan an item’s label.

“Today, augmented reality is changing how we talk with our friends,” Snap co-founder and CTO Bobby Murphy said in a press briefing. “But in the future, we’ll use it to see the world in all-new ways.”

BYO[AI]

Snap wants developers to bring their own neural net models to its platform to enable a more creative, machine learning-intensive class of Lenses. SnapML lets developers import trained models that allow users to augment their environment, creating visual filters that transform scenes in more sophisticated ways.

The data sets that creators upload to Lens Studio will let their Lenses see with a new set of eyes and search out new objects. Snap is partnering with AR startup Wannaby to give developers access to its foot-tracking tech, enabling Lenses that let users try on sneakers virtually. Another partnership, with Prisma, lets the Lens camera render the world in familiar artistic styles.

Snap hopes that by pairing the machine learning community and the creative community, users will be able to gain access to something entirely new. “We’re hoping to see a completely new type of lenses that we’ve never seen before,” Snap AR exec Eitan Pilipski told TechCrunch.

Snapchat starts mapping the world

One of Snap’s big AR announcements last year was a feature called Landmarkers, which let developers create more sophisticated Lenses that leveraged geometric models of popular large landmarks, like the Eiffel Tower in Paris or the Flatiron Building in NYC, to make geography-specific Lenses that played with the real world.

The tech was relatively easy to pull off, if only because the structures Snap chose were so ubiquitous and 3D files of their exteriors were readily available. The company’s next AR effort is more ambitious. A new feature called Local Lenses will let Snapchat developers create geography-specific Lenses that interact with a much wider swath of physical locations.

“[W]e’re taking it a step further, by enabling shared and persistent augmented reality in much larger areas, so you can experience AR with your friends, at the same time, across entire city blocks,” Murphy said.

How will Snapchat get all of this 3D data in the first place? It is analyzing public Snaps that users shared to the company’s public Our Story feed, extracting visual data about the buildings and structures in those photos and using it to create more accurate 3D maps of locations.

Companies interested in augmented reality are increasingly racing to collect 3D data. Last month, Pokémon GO maker Niantic announced that it would begin collecting 3D data from users on an opt-in basis.

source https://techcrunch.com/2020/06/11/snapchat-boosts-its-ar-platform-with-voice-search-local-lenses-and-snapml/
