Eyewitness photos and videos distributed by news wire Reuters already go through an exhaustive media verification process. Now the publisher will bring that expertise to the fight against misinformation on Facebook. Today it launches the new Reuters Fact Check business unit and blog, announcing that it will become one of the third-party partners tasked with debunking lies spread on the social network.

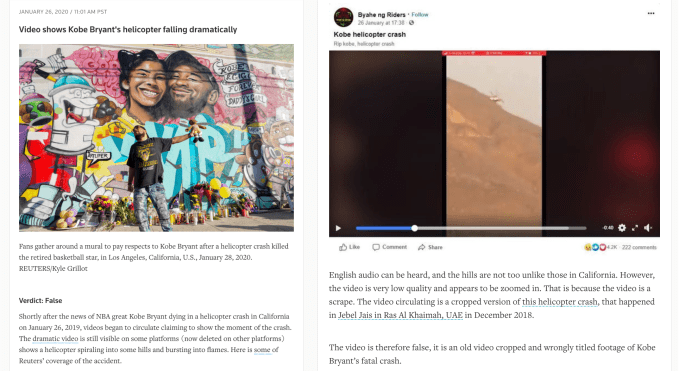

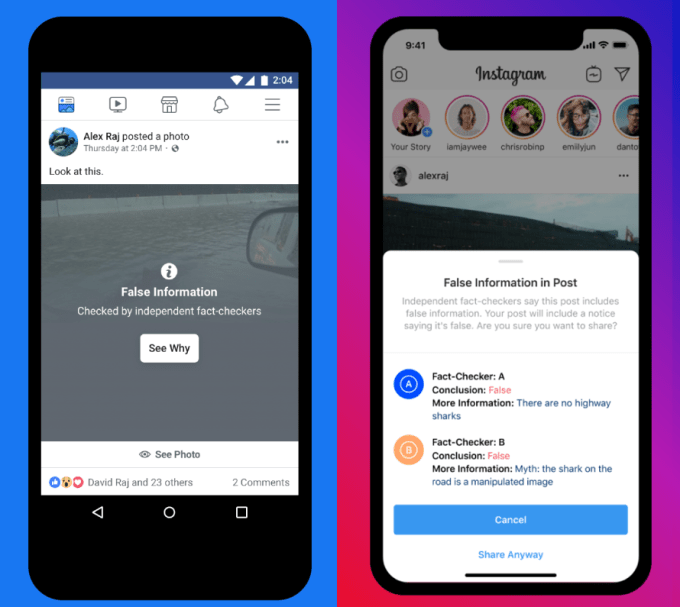

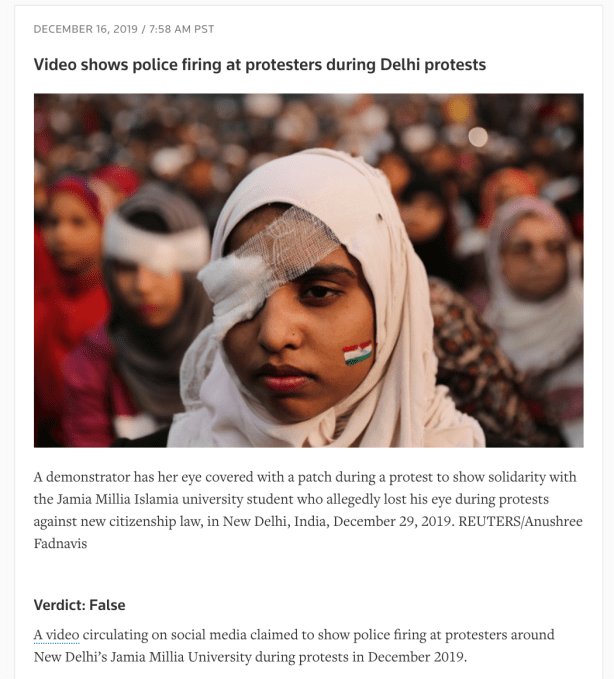

The four-person Reuters team will review user-generated video and photos, as well as news headlines and other content in English and Spanish, that are submitted by Facebook or flagged by the wider Reuters editorial team. It will publish its findings on the new Reuters Fact Check blog, listing the core claim and explaining why it’s false, partially false, or true. Facebook will then use those conclusions to label misinformation posts as false and downrank them in the News Feed algorithm to limit their spread.

“I can’t disclose any more about the terms of the financial agreement, but I can confirm that they do pay for this service,” Reuters’ Director of Global Partnerships Jessica April tells me of the deal with Facebook. Reuters joins a list of US fact-checking partners that includes The Associated Press, PolitiFact, Factcheck.org, and four others. Facebook offers fact-checking in over 60 countries, though often with just one partner, such as Agence France-Presse’s local branches.

Reuters will have two fact-checking staffers in Washington, D.C. and two in Mexico City. For reference, Reuters has over 25,000 employees. Reuters’ Global Head of UGC Newsgathering Hazel Baker said the fact-checking team could grow over time, as Reuters plans to partner with Facebook through the 2020 election and beyond. The fact checkers will operate separately from Reuters’ 12-person media verification team, though they will draw on lessons learned there.

Reuters Fact Check will review content across the spectrum of misinformation formats. “We have a scale. On one end is content that is not manipulated but has lost context: old and recycled videos,” Baker tells me, referencing lessons from the course she co-authored on spotting misinformation. Next up the scale are simply edited photos and videos that might be slowed down, sped up, spliced, or filtered. Then there’s staged media that has been acted out or forged, like an audio clip recorded and maliciously attributed to a politician. Next is computer-generated imagery that can concoct content or add fake things to a real video. “And finally there is synthetic or deepfake video,” which Baker said takes the most work to produce.

Baker acknowledged criticism of how slow Facebook is to direct hoaxes and misinformation to fact-checkers. While Facebook claims that, once content is deemed false, downranking can reduce its further spread by 80%, that doesn’t account for all the views the content gets before it’s submitted and before fact-checkers reach it amid deep queues of suspicious posts. “One thing we have as an advantage of Reuters is an understanding of the importance of speed,” Baker insists. That’s partly why the team will review content Reuters chooses, not just what Facebook has submitted.

Unfortunately, one thing the team won’t be addressing is the widespread criticism of Facebook’s policy of refusing to fact-check political ads, even those that pair sensational and defamatory misinformation with calls to donate to a campaign. “We wouldn’t comment on that Facebook policy. That’s ultimately up to them,” Baker tells TechCrunch. We’ve called on Facebook to ban political ads, fact-check them (or at least those from presidential candidates), limit microtargeting, and/or only allow campaign ads in standardized formats that leave no room for potentially misleading claims.

The problem of misinformation looms large as we enter the primaries ahead of the 2020 election. Misinformation is no longer just financially motivated: anyone from individual trolls to shady campaigns to foreign intelligence operatives can find political incentives for mucking with democracy. Ideally, an organization with the experience and legitimacy of Reuters would have the funding to put more than four staffers to work protecting hundreds of millions of Facebook users.

Unfortunately, Facebook is straining its bottom line to make up for years of neglect around safety. Big expenditures on content moderators, security engineers, and policy improvements depressed its net income growth from 61% year-over-year at the end of 2018 to just 7% as of last quarter. That’s a quantified commitment to improvement. Yet clearly the troubles remain.

Facebook spent years crushing its earnings reports with rapid gains in user count, revenue, and profits. But it turns out that what looked like incredible software-powered margins were propped up by an absence of spending on safeguards. The sudden awakening to the price of protecting users has hit other tech companies like Airbnb, which the Wall Street Journal reports fell from a $200 million yearly profit in late 2018 to a $332 million loss a year later as it combats theft, vandalism, and discrimination.

Paying Reuters to help is another step in the right direction for Facebook, which is now two years into its fact-checking foray. It’s just too bad it started so far behind.

Source: https://techcrunch.com/2020/02/12/reuters-facebook-fact-checker/
