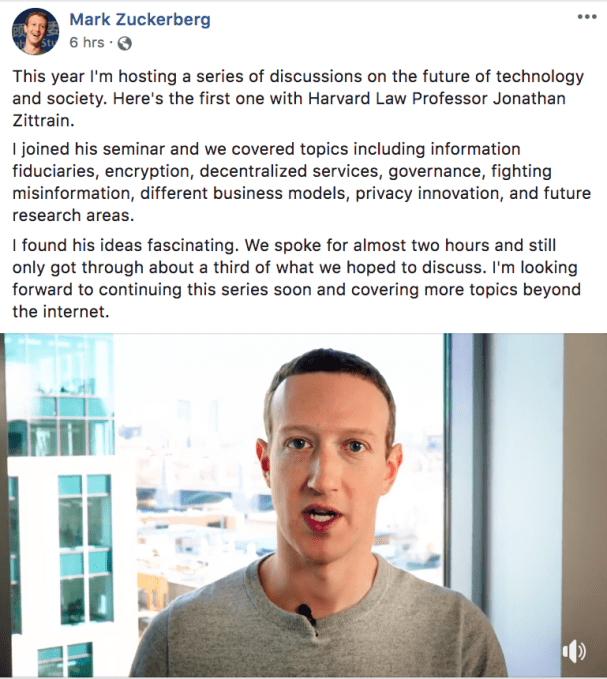

Mark Zuckerberg says it might be right for Facebook to let people pay to not see ads, but that it would feel wrong to charge users for extra privacy controls. That’s just one of the fascinating philosophical views the CEO shared during the first of the public talks he’s promised as part of his 2019 personal challenge.

Talking to Harvard Law and computer science professor Jonathan Zittrain on the campus of the university he dropped out of, Zuckerberg managed to escape the 100-minute conversation with just a few gaffes. At one point he said “we definitely don’t want a society where there’s a camera in everyone’s living room watching the content of those conversations”. Zittrain swiftly reminded him that’s exactly what Facebook Portal is, and Zuckerberg tried to deflect by saying Portal’s recordings would be encrypted.

Later Zuckerberg mentioned “the ads, in a lot of places are not even that different from the organic content in terms of the quality of what people are being able to see” which is a pretty sad and derisive assessment of the personal photos and status updates people share. And when he suggested crowdsourced fact-checking, Zittrain chimed in that this could become an avenue for “astroturfing” where mobs of users provide purposefully biased information to promote their interests, like a political group’s supporters voting that their opponents’ facts are lies. While sometimes avoiding hard stances on questions, Zuckerberg was otherwise relatively logical and coherent.

Policy And Cooperating With Governments

The CEO touched on his borderline content policy that quietly demotes posts that come close to breaking its policy against nudity, hate speech, etc., that otherwise are the most sensational and get the most distribution but don’t make people feel good. Zuckerberg noted some progress here, saying “a lot of the things that we’ve done in the last year were focused on that problem and it really improves the quality of the service and people appreciate that.”

On working with governments, Zuckerberg explained how incentives weren’t always aligned, like when law enforcement is monitoring someone accidentally dropping clues about their crimes and collaborators. The government and society might benefit from that continued surveillance but Facebook might want to immediately suspend the account if it found out. “But as you build up the relationships and trust, you can get to that kind of a relationship where they can also flag for you, ‘Hey, this is where we’re at’”, implying Facebook might purposefully allow that person to keep incriminating themselves to assist the authorities.

But disagreements between governments can flare up. Zuckerberg notes that “we’ve had employees thrown in jail because we have gotten court orders that we have to turn over data that we wouldn’t probably anyway, but we can’t because it’s encrypted.” That’s likely a reference to the 2016 arrest of Facebook’s VP for Latin America Diego Dzodan over WhatsApp’s encryption preventing the company from providing evidence for a drug case.

Decentralizing Facebook

The tradeoffs of encryption and decentralization were a central theme. He discussed how while many people fear how encryption could mask illegal or offensive activity, Facebook doesn’t have to peek at someone’s actual content to determine they’re violating policy. “One of the — I guess, somewhat surprising to me — findings of the last couple of years of working on content governance and enforcement is that it often is much more effective to identify fake accounts and bad actors upstream of them doing something bad by patterns of activity rather than looking at the content” Zuckerberg said.

With Facebook rapidly building out a blockchain team to potentially launch a cryptocurrency for fee-less payments or an identity layer for decentralized applications, Zittrain asked about the potential for letting users control which other apps they give their profile information to without Facebook as an intermediary.

SAN JOSE, CA – MAY 01: Facebook CEO Mark Zuckerberg (Photo by Justin Sullivan/Getty Images)

Zuckerberg stressed that at Facebook’s scale, moving to a less efficient distributed architecture would be extremely “computationally intense” though it might eventually be possible. Instead, he said “One of the things that I’ve been thinking about a lot is a use of blockchain that I am potentially interested in– although I haven’t figured out a way to make this work out, is around authentication and bringing– and basically granting access to your information and to different services. So, basically, replacing the notion of what we have with Facebook Connect with something that’s fully distributed.” This might be attractive to developers who would know Facebook couldn’t cut them off from the users.

The problem is that if a developer was abusing users, Zuckerberg fears that “in a fully distributed system there would be no one who could cut off the developers’ access. So, the question is if you have a fully distributed system, it dramatically empowers individuals on the one hand, but it really raises the stakes and it gets to your questions around, well, what are the boundaries on consent and how people can really actually effectively know that they’re giving consent to an institution?”

No “Pay For Privacy”

But perhaps most novel and urgent were Zuckerberg’s comments on the secondary questions raised by whether Facebook should let people pay to remove ads. “You start getting into a principle question which is ‘are we going to let people pay to have different controls on data use than other people?’ And my answer to that is a hard no.” Facebook has promised to always operate a free version so everyone can have a voice. Yet some including myself have suggested that a premium ad-free subscription to Facebook could help wean it off maximizing data collection and engagement, though it might break Facebook’s revenue machine by pulling the most affluent and desired users out of the ad targeting pool.

“What I’m saying is on the data use, I don’t believe that that’s something that people should buy. I think the data principles that we have need to be uniformly available to everyone. That to me is a really important principle” Zuckerberg expands. “It’s, like, maybe you could have a conversation about whether you should be able to pay and not see ads. That doesn’t feel like a moral question to me. But the question of whether you can pay to have different privacy controls feels wrong.”

Back in May, Zuckerberg announced Facebook would build a Clear History button in 2018 that deletes all the web browsing data the social network has collected about you, but that data’s deep integration into the company’s systems has delayed the launch. Research suggests users don’t want the inconvenience of getting logged out of all their Facebook Connected services, though they’d like to hide certain data from the company.

“Clear history is a prerequisite, I think, for being able to do anything like subscriptions. Because, like, partially what someone would want to do if they were going to really actually pay for a not ad supported version where their data wasn’t being used in a system like that, you would want to have a control so that Facebook didn’t have access or wasn’t using that data or associating it with your account. And as a principled matter, we are not going to just offer a control like that to people who pay.”

Of all the apologies, promises, and predictions Zuckerberg has made recently, this pledge might instill the most confidence. While some might think of Zuckerberg as a data tyrant out to absorb and exploit as much of our personal info as possible, there are at least lines he’s not willing to cross. Facebook could try to charge you for privacy, but it won’t. And given Facebook’s dominance in social networking and messaging plus Zuckerberg’s voting control of the company, a greedier man could make the internet much worse.

—

TRANSCRIPT – MARK ZUCKERBERG AT HARVARD / FIRST PERSONAL CHALLENGE 2019

Jonathan Zittrain: Very good. So, thank you, Mark, for coming to talk to me and to our students from the Techtopia program and from my “Internet and Society” course at Harvard Law School. We’re really pleased to have a chance to talk about any number of issues and we should just dive right in. So, privacy, autonomy, and information fiduciaries.

Mark Zuckerberg: All right!

Jonathan Zittrain: Love to talk about that.

Mark Zuckerberg: Yeah! I read your piece in The New York Times.

Jonathan Zittrain: The one with the headline that said, “Mark Zuckerberg can fix this mess”?

Mark Zuckerberg: Yeah.

Jonathan Zittrain: Yeah.

Mark Zuckerberg: Although that was last year.

<laughter>

Jonathan Zittrain: That’s true! Are you suggesting it’s all fixed?

<laughter>

Mark Zuckerberg: No. No.

<laughter>

Jonathan Zittrain: Okay, good. So–

Mark Zuckerberg: I’m suggesting that I’m curious whether you still think that we can fix this mess?

Jonathan Zittrain: Ah! <laughter>

Jonathan Zittrain: I hope– <laughter>

Jonathan Zittrain: “Hope springs eternal”–

Mark Zuckerberg: Yeah, there you go.

Jonathan Zittrain: –is my motto. So, all right, let me give a quick characterization of this idea that the coinage and the scaffolding for it is from my colleague, Jack Balkin, at Yale. And the two of us have been developing it out further. There are a standard number of privacy questions with which you might have some familiarity, having to do with people conveying information that they know they’re conveying or they’re not so sure they are, but “mouse droppings” as we used to call them when they run in the rafters of the Internet and leave traces. And then the standard way of talking about that is you want to make sure that that stuff doesn’t go where you don’t want it to go. And we call that “informational privacy”. We don’t want people to know stuff that we want maybe our friends only to know. And on a place like Facebook, you’re supposed to be able to tweak your settings and say, “Give them to this and not to that.” But there’s also ways in which stuff that we share with consent could still sort of be used against us and it feels like, “Well, you consented,” may not end the discussion. And the analogy that my colleague Jack brought to bear was one of a doctor and a patient or a lawyer and a client or– sometimes in America, but not always– a financial advisor and a client that says that those professionals have certain expertise, they get trusted with all sorts of sensitive information from their clients and patients and, so, they have an extra duty to act in the interests of those clients even if their own interests conflict. And, so, maybe just one quick hypo to get us started. I wrote a piece in 2014, that maybe you read, that was a hypothetical about elections in which it said, “Just hypothetically, imagine that Facebook had a view about which candidate should win and they reminded people likely to vote for the favored candidate that it was Election Day,” and to others they simply sent a cat photo. Would that be wrong? 
And I find– I have no idea if it’s illegal; it does seem wrong to me and it might be that the fiduciary approach captures what makes it wrong.

Mark Zuckerberg: All right. So, I think we could probably spend the whole next hour just talking about that! <laughter>

Mark Zuckerberg: So, I read your op-ed and I also read Balkin’s blogpost on information fiduciaries. And I’ve had a conversation with him, too.

Jonathan Zittrain: Great.

Mark Zuckerberg: And the– at first blush, kind of reading through this, my reaction is there’s a lot here that makes sense. Right? The idea of us having a fiduciary relationship with the people who use our services is kind of intuitively– it’s how we think about how we’re building what we’re building. So, reading through this, it’s like, all right, you know, a lot of people seem to have this mistaken notion that when we’re putting together news feed and doing ranking that we have a team of people who are focused on maximizing the time that people spend, but that’s not the goal that we give them. We tell people on the team, “Produce the service–” that we think is going to be the highest quality that– we try to ground it in kind of getting people to come in and tell us, right, of the content that we could potentially show what is going to be– they tell us what they want to see, then we build models that kind of– that can predict that, and build that service.

Jonathan Zittrain: And, by the way, was that always the case or–

Mark Zuckerberg: No.

Jonathan Zittrain: –was that a place you got to through some course adjustments?

Mark Zuckerberg: Through course adjustments. I mean, you start off using simpler signals like what people are clicking on in feed, but then you pretty quickly learn, “Hey, that gets you to local optimum,” right? Where if you’re focusing on what people click on and predicting what people click on, then you select for click bait. Right? So, pretty quickly you realize from real feedback, from real people, that’s not actually what people want. You’re not going to build the best service by doing that. So, you bring in people and actually have these panels of– we call it “getting to ground truth”– of you show people all the candidates for what can be shown to them and you have people say, “What’s the most meaningful thing that I wish that this system were showing us?” So, all this is kind of a way of saying that our own self image of ourselves and what we’re doing is that we’re acting as fiduciaries and trying to build the best services for people. Where I think that this ends up getting interesting is then the question of who gets to decide in the legal sense or the policy sense of what’s in people’s best interest? Right? So, we come in every day and think, “Hey, we’re building a service where we’re ranking newsfeed trying to show people the most relevant content with an assumption that’s backed by data; that, in general, people want us to show them the most relevant content.” But, at some level, you could ask the question which is “Who gets to decide that ranking newsfeed or showing relevant ads?” or any of the other things that we choose to work on are actually in people’s interest. And we’re doing the best that we can to try to build the services that we think are the best. At the end of the day, a lot of this is grounded in “People choose to use it.” Right? Because, clearly, they’re getting some value from it. But then there are all these questions like you say about, you have– about where people can effectively give consent and not.

Jonathan Zittrain: Yes.

Mark Zuckerberg: So, I think that there’s a lot of interesting questions in this to unpack about how you’d implement a model like that. But, at a high level I think, you know, one of the things that I think about in terms of we’re running this big company; it’s important in society that people trust the institutions of society. Clearly, I think we’re in a position now where people rightly have a lot of questions about big internet companies, Facebook in particular, and I do think getting to a point where there’s the right regulation and rules in place just provides a kind of societal guardrail framework where people can have confidence that, okay, these companies are operating within a framework that we’ve all agreed. That’s better than them just doing whatever they want. And I think that that would give people confidence. So, figuring out what that framework is, I think, is a really important thing. And I’m sure we’ll talk about that as it relates–

Jonathan Zittrain: Yes.

Mark Zuckerberg: –to a lot of the content areas today. But getting to that question of how do you– “Who determines what’s in people’s best interest, if not people themselves?”

Jonathan Zittrain: Yes.

Mark Zuckerberg: –is a really interesting question.

Jonathan Zittrain: Yes, so, we should surely talk about that. So, on our a

source https://techcrunch.com/2019/02/20/zuckerberg-harvard-zittrain/
